Direct Application of Convolutional Neural Network Features to Image Quality Assessment

We take advantage of the popularity of deep convolutional neural networks (CNNs) to develop a very simple image quality assessment method that rivals the state of the art. We show that the convolutional layer outputs (deep features) of a CNN compute local structural information of spatial regions of different sizes in the input image. The learned convolutional kernels contain a much richer set of weights than hand-crafted ones and therefore capture much more local structural information. Because deep features learned from large datasets already contain very rich multi-resolution structural information, they can be used directly to calculate the visual distortion of an image, with no need for further complicated computation. We present experimental results demonstrating that this is indeed the case: a simple cosine distance between deep features is as good as state-of-the-art methods for full-reference image quality assessment.
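A minimal NumPy sketch of such a score follows. It assumes the feature maps of the reference and distorted images have already been extracted from the same layers of a pretrained CNN (extraction is not shown). Flattening each layer into one vector, and averaging the per-layer distances with equal weight, are illustrative assumptions rather than the paper's exact recipe. Each layer contributes 1 minus the cosine similarity of its two feature vectors, so identical features give a distance of 0.

```python
import numpy as np


def cosine_distance(a: np.ndarray, b: np.ndarray, eps: float = 1e-12) -> float:
    """Return 1 - cosine similarity of two arrays, each flattened to a vector."""
    a = np.asarray(a, dtype=np.float64).ravel()
    b = np.asarray(b, dtype=np.float64).ravel()
    sim = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b) + eps)
    return float(1.0 - sim)


def deep_feature_distance(ref_feats, dist_feats) -> float:
    """Average cosine distance over corresponding layers.

    ref_feats, dist_feats: lists of feature maps (e.g. C x H x W arrays), one
    per chosen CNN layer. Assumption: both lists come from the same network
    and the same layers, in the same order. Equal layer weights are assumed.
    """
    if len(ref_feats) != len(dist_feats):
        raise ValueError("feature lists must have the same number of layers")
    dists = [cosine_distance(r, d) for r, d in zip(ref_feats, dist_feats)]
    return float(np.mean(dists))


if __name__ == "__main__":
    # Random non-negative arrays stand in for (hypothetical) ReLU feature maps.
    rng = np.random.default_rng(0)
    ref = [rng.random((64, 32, 32)), rng.random((128, 16, 16))]
    noisy = [f + 0.5 * rng.standard_normal(f.shape) for f in ref]
    print(deep_feature_distance(ref, ref))    # close to 0 for identical features
    print(deep_feature_distance(ref, noisy))  # larger for the perturbed features
```

Because cosine similarity ignores the overall magnitude of the activations, this score reacts to changes in the pattern of the features rather than to a uniform rescaling of them.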

- Although still very difficult to interpret, deep features extracted from pretrained convolutional neural networks generalize surprisingly well from one task to another under the framework of transfer learning. In addition to high-level visual recognition problems, deep features have also been used successfully to solve various image transformation tasks. In particular, perceptual loss functions defined in the deep feature space have been used successfully to measure high-level image similarities. It is therefore of great interest to investigate why deep features can be used to measure such similarities.
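One common form of such a perceptual loss is the feature reconstruction loss used in style-transfer and super-resolution work: the squared Euclidean distance between two feature maps, divided by their number of elements. The sketch below is a minimal NumPy version. It assumes the C x H x W feature maps have already been extracted from a pretrained CNN, and the function names and per-layer weights are hypothetical illustrations, not something defined in this paper.

```python
import numpy as np


def feature_reconstruction_loss(feat_x, feat_y) -> float:
    """Squared Euclidean distance between two feature maps of shape (C, H, W),
    divided by the number of elements C * H * W."""
    feat_x = np.asarray(feat_x, dtype=np.float64)
    feat_y = np.asarray(feat_y, dtype=np.float64)
    if feat_x.shape != feat_y.shape:
        raise ValueError("feature maps must have the same shape")
    return float(np.sum((feat_x - feat_y) ** 2) / feat_x.size)


def perceptual_loss(feats_x, feats_y, weights=None) -> float:
    """Weighted sum of per-layer feature reconstruction losses.

    feats_x, feats_y: lists of feature maps taken from the same CNN layers
    (hypothetical inputs; extraction is not shown).
    weights: one float per layer; defaults to 1.0 for every layer.
    """
    if len(feats_x) != len(feats_y):
        raise ValueError("feature lists must have the same number of layers")
    if weights is None:
        weights = [1.0] * len(feats_x)
    if len(weights) != len(feats_x):
        raise ValueError("need exactly one weight per layer")
    return float(sum(w * feature_reconstruction_loss(fx, fy)
                     for w, fx, fy in zip(weights, feats_x, feats_y)))
```

Unlike a cosine-based measure, this loss depends on the magnitude of the activations, so uniformly scaling one image's features changes its value; that is one design difference to weigh when choosing a distance in the deep feature space.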

- In this paper, we propose using learned features to design image quality assessment metrics under a deep learning framework. The key insight is that a pretrained deep convolutional neural network incorporates structural and perceptual image information in its hidden features, which can be used directly in place of hand-crafted features when designing image quality measures. Our DFB-IQA index aims to provide a good approximation of perceived image distortion by incorporating the spatial correlation of image pixels and object structure information, both of which are captured implicitly by filters learned from training on a large-scale image dataset rather than by human engineering.

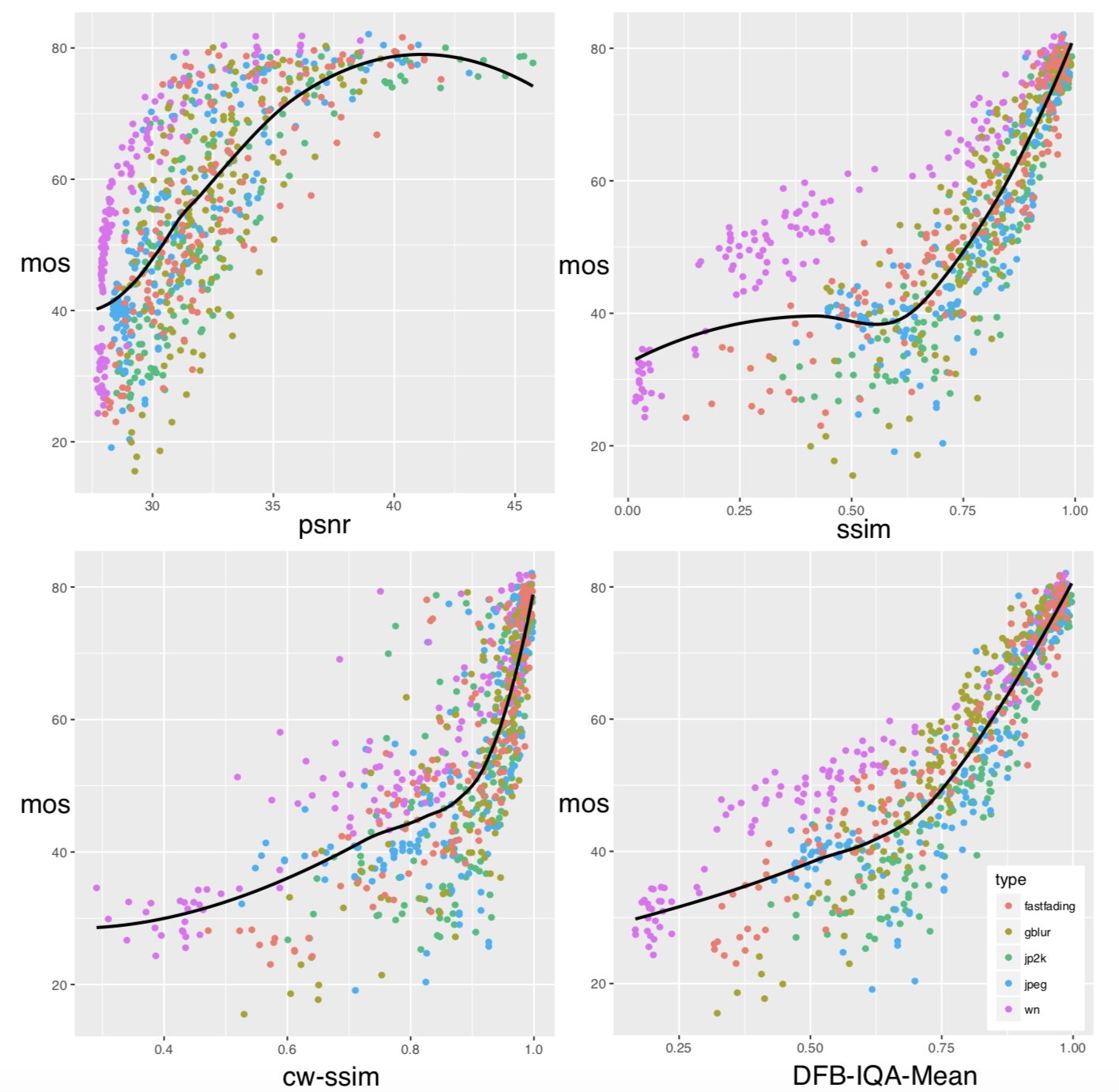

- We conduct experiments to compare the performance of the proposed DFB-IQA index with that of other image quality assessment methods. Figure 1 shows scatter plots of the mean opinion score (MOS) versus the predictions of different models across different types of distortion. The proposed DFB-IQA index performs well in this test and behaves consistently with MOS. Although SSIM performs well for a single type of distortion, it does not generalize well in cross-distortion testing. In contrast, the scatter plot of the DFB-IQA index is more compact, showing that it predicts the mean opinion scores remarkably well.
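Scatter plots like these are commonly summarized in the IQA literature by correlation coefficients between predictions and MOS, such as Spearman's rank-order correlation (SROCC) and Pearson's linear correlation (PLCC). The NumPy sketch below computes both. It is an illustration under stated assumptions, not the evaluation code behind Figure 1; in particular, it skips the nonlinear (e.g. logistic) mapping that IQA studies often fit before computing PLCC, and the toy scores are made up.

```python
import numpy as np


def average_ranks(x) -> np.ndarray:
    """Ranks 1..n; tied values all receive the mean of their ranks."""
    x = np.asarray(x, dtype=np.float64)
    order = np.argsort(x, kind="mergesort")  # stable, so ties stay adjacent
    ranks = np.empty(len(x), dtype=np.float64)
    ranks[order] = np.arange(1, len(x) + 1)
    for v in np.unique(x):
        mask = x == v
        if mask.sum() > 1:
            ranks[mask] = ranks[mask].mean()
    return ranks


def plcc(pred, mos) -> float:
    """Pearson linear correlation (no nonlinear mapping applied first)."""
    return float(np.corrcoef(pred, mos)[0, 1])


def srocc(pred, mos) -> float:
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return float(np.corrcoef(average_ranks(pred), average_ranks(mos))[0, 1])


if __name__ == "__main__":
    # Hypothetical distance-type scores: larger distance means worse quality,
    # so the correlation with MOS is negative; compare magnitudes across indexes.
    pred = np.array([0.05, 0.10, 0.30, 0.60])
    mos = np.array([4.5, 4.0, 3.0, 1.5])
    print(round(srocc(pred, mos), 6))  # -1.0
    print(plcc(pred, mos))
```

SROCC only checks that an index orders images the same way as MOS, which is why it tolerates a monotone but nonlinear relationship; PLCC additionally rewards a linear one, which is what a compact, straight scatter plot looks like.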

- Reference

Xianxu Hou, Ke Sun, Bozhi Liu, Yuanhao Gong, Jonathan Garibaldi and Guoping Qiu. “Direct Application of Convolutional Neural Network Features to Image Quality Assessment”, VCIP 2018.

Figure 1: Scatter plots of MOS vs. different image quality indexes