Abstract

Recently, computational models based on deep neural networks have made impressive progress in predicting human visual saliency. Relying on the powerful capability of pre-trained networks, various models can extract diverse deep features. However, these models do not address the problem of feature selection and reweighting when predicting saliency. As a result, features describing scene distractors may also contribute to the saliency maps. In this paper, we propose a feature selection and reweighting module (FSRM) for deep saliency prediction models. Through the FSRM, we aim to highlight the saliency-related features in a manner similar to channel attention and simultaneously exclude distractor features by reducing the channel number of deep features. Specifically, in the FSRM, we obtain an importance descriptor of feature channels, in which some saliency knowledge, including the center prior and rarity, is encoded. Furthermore, the number of feature channels is reduced via a transformation matrix derived from the importance descriptor. To predict the saliency, the FSRM is embedded in a hierarchical fusion network that makes use of multi-level features. Experiments and ablation studies show the effectiveness and generalization capability of the FSRM in saliency prediction.

More details about the work:

1. Details of the proposed model.

2. Details about the generation of the two-dimensional Gaussian map G.

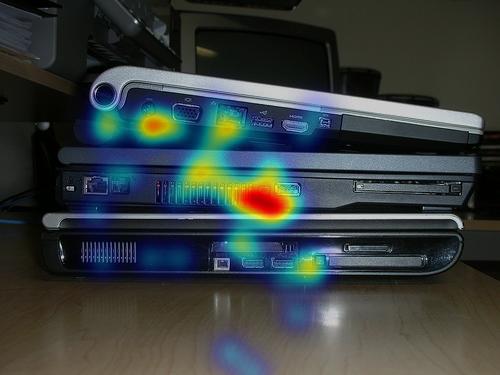

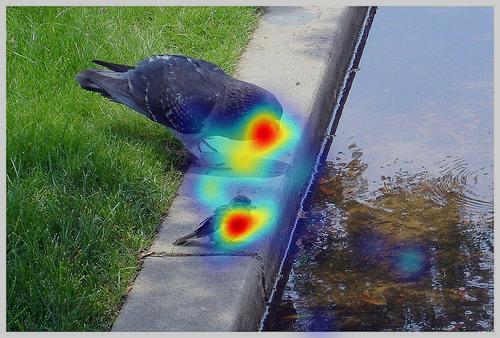

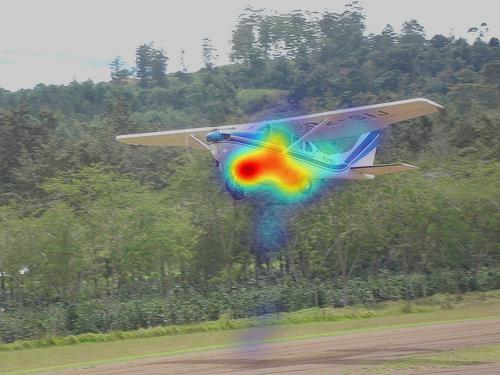

3. More visual comparisons between the EHK model and the proposed one.

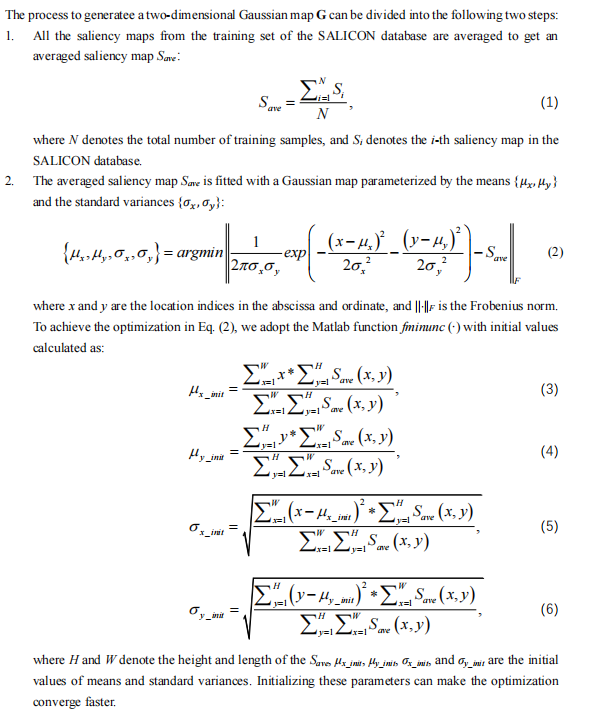

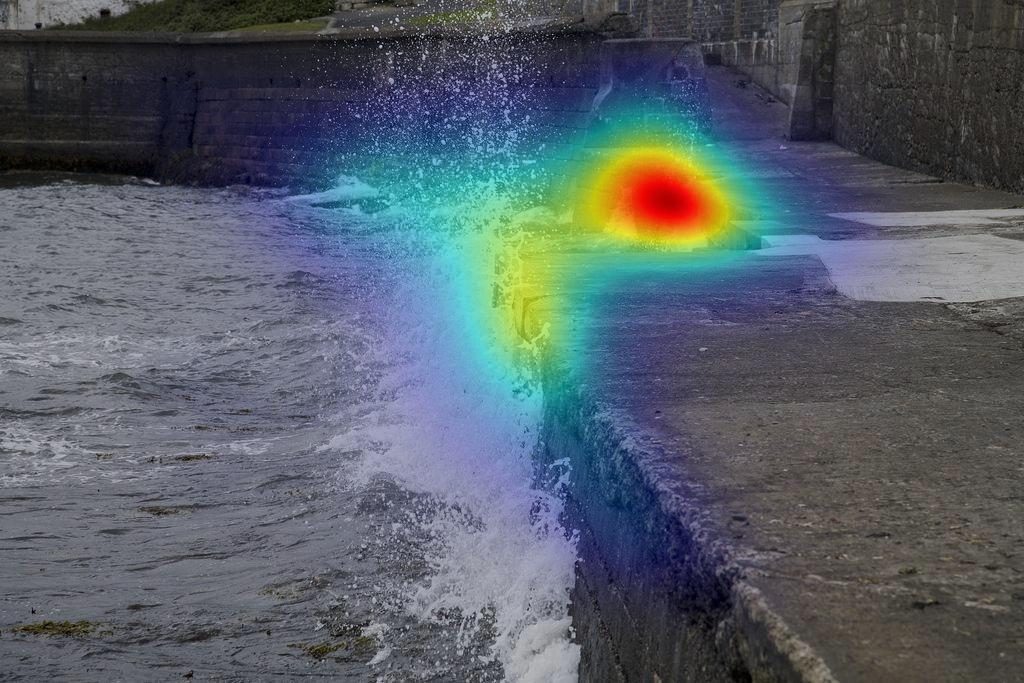

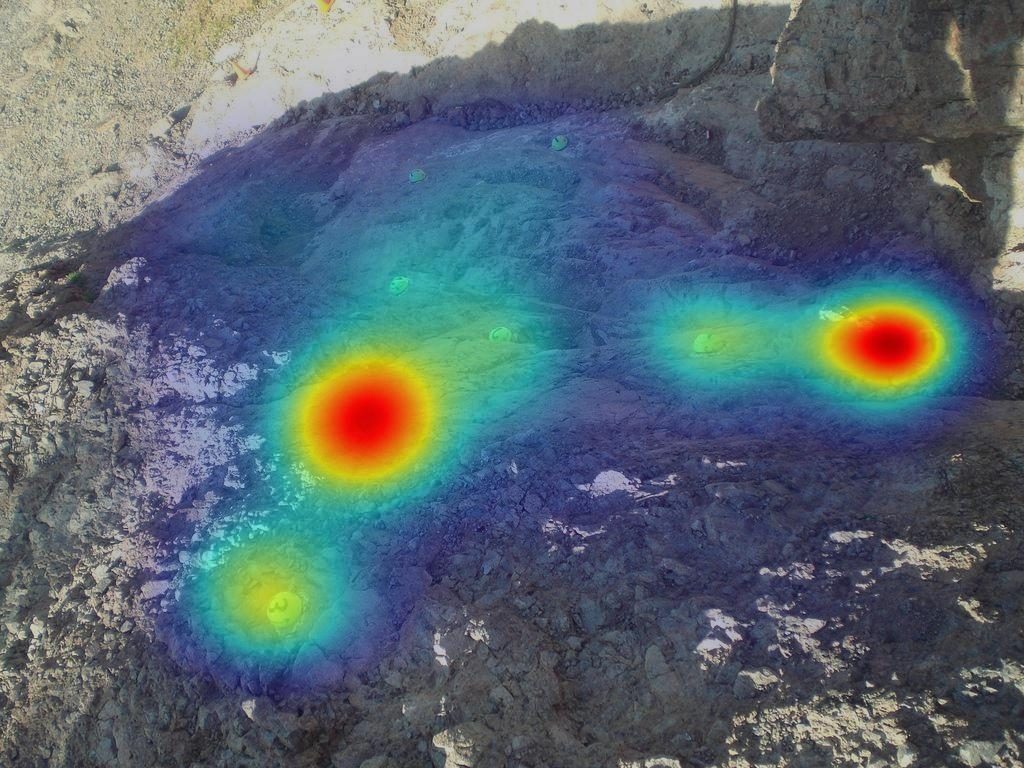

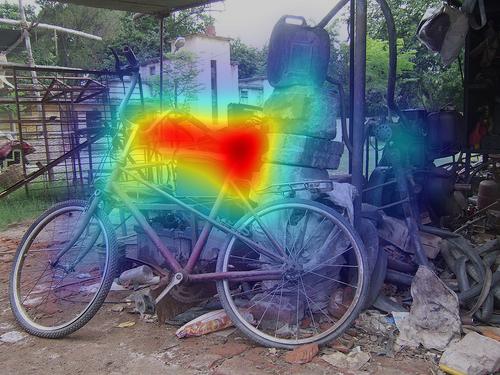

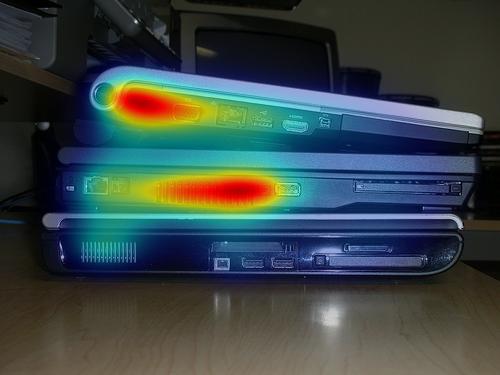

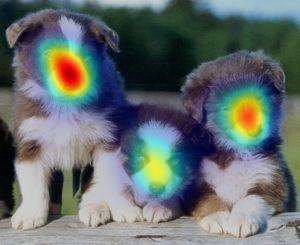

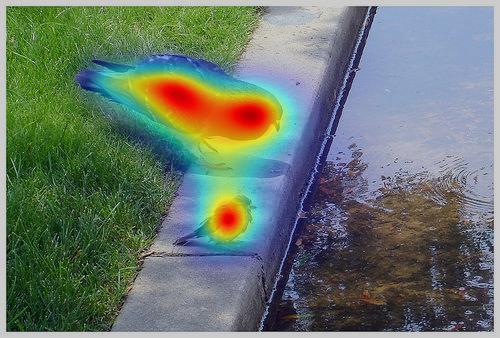

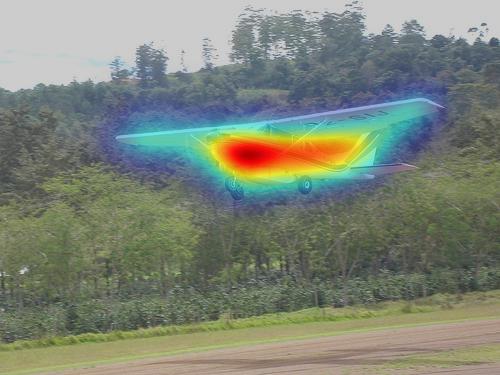

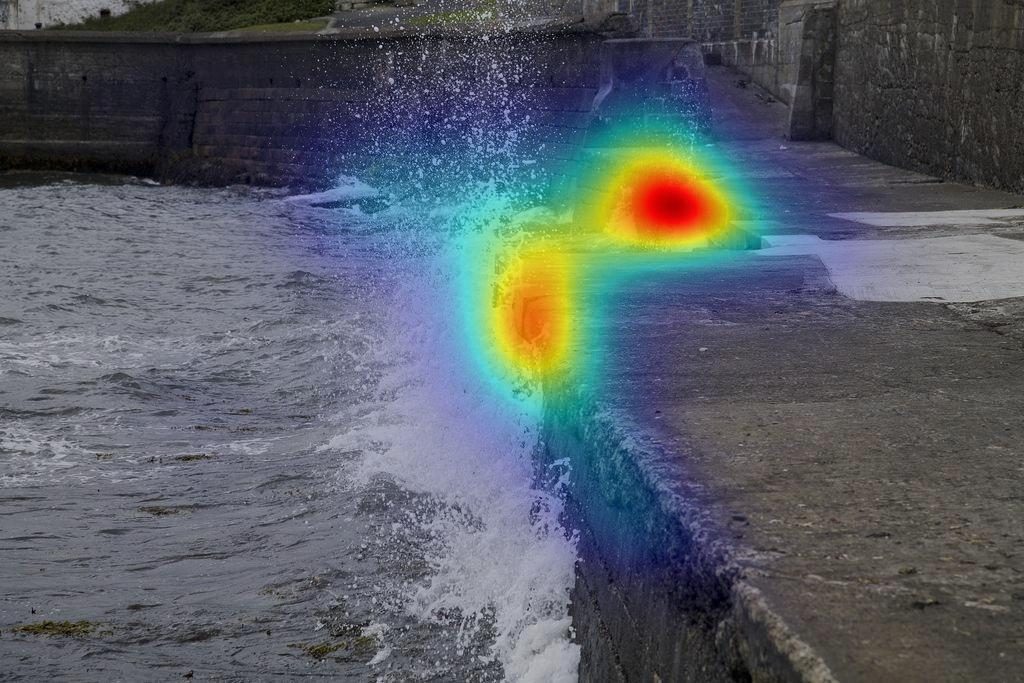

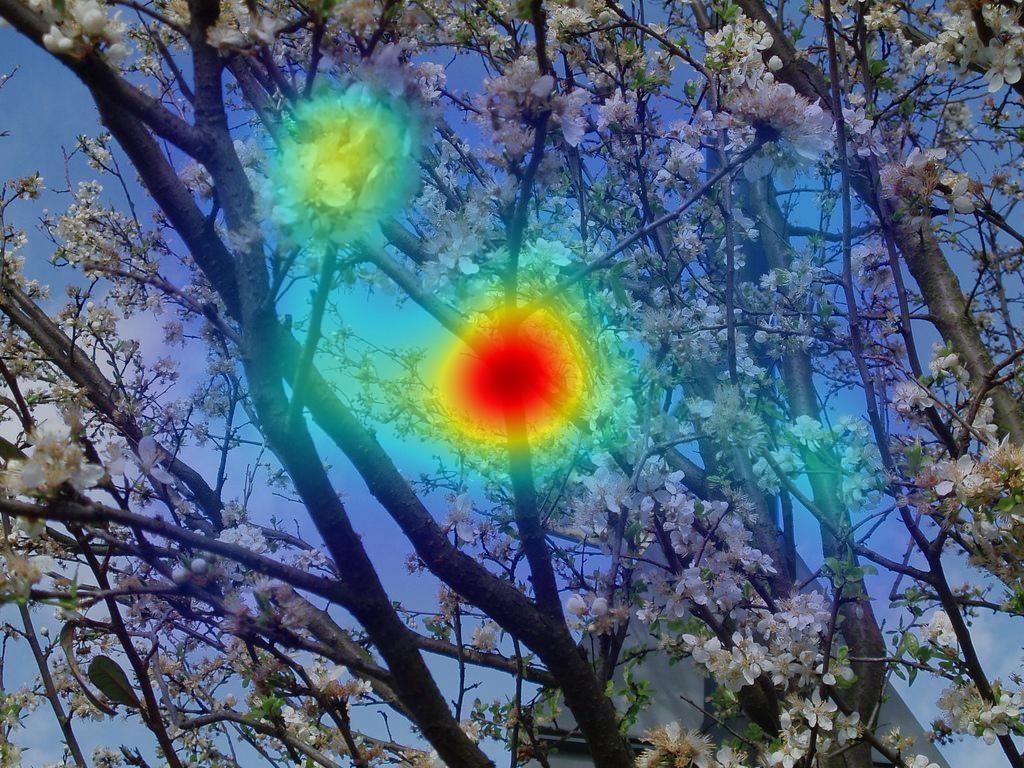

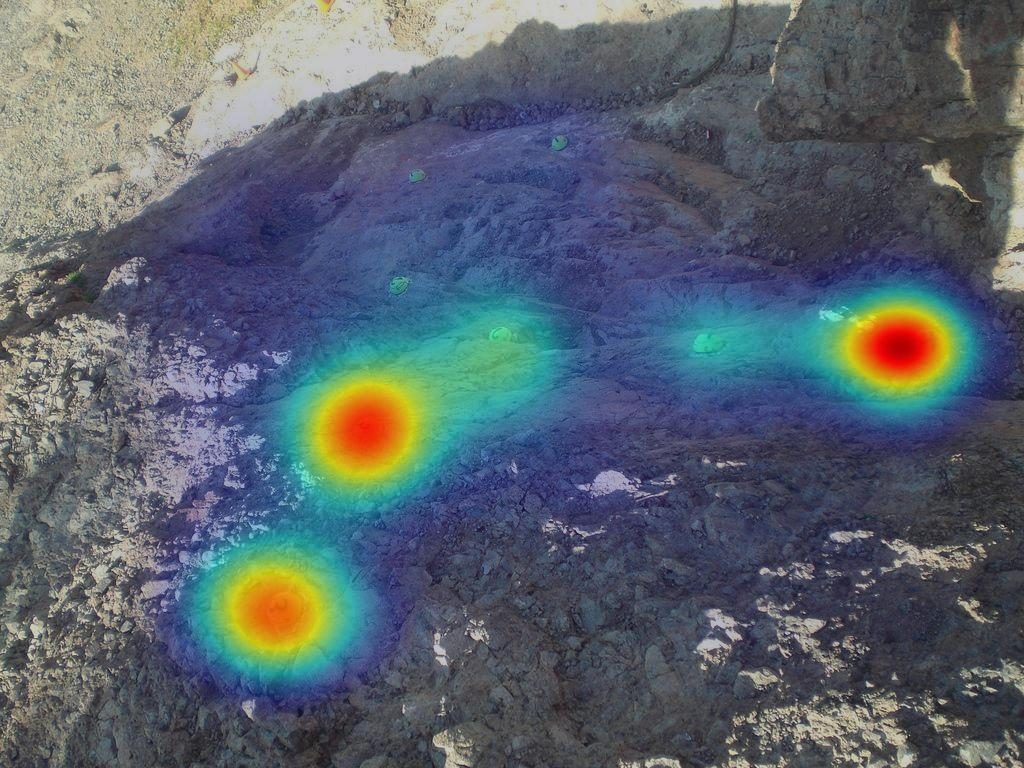

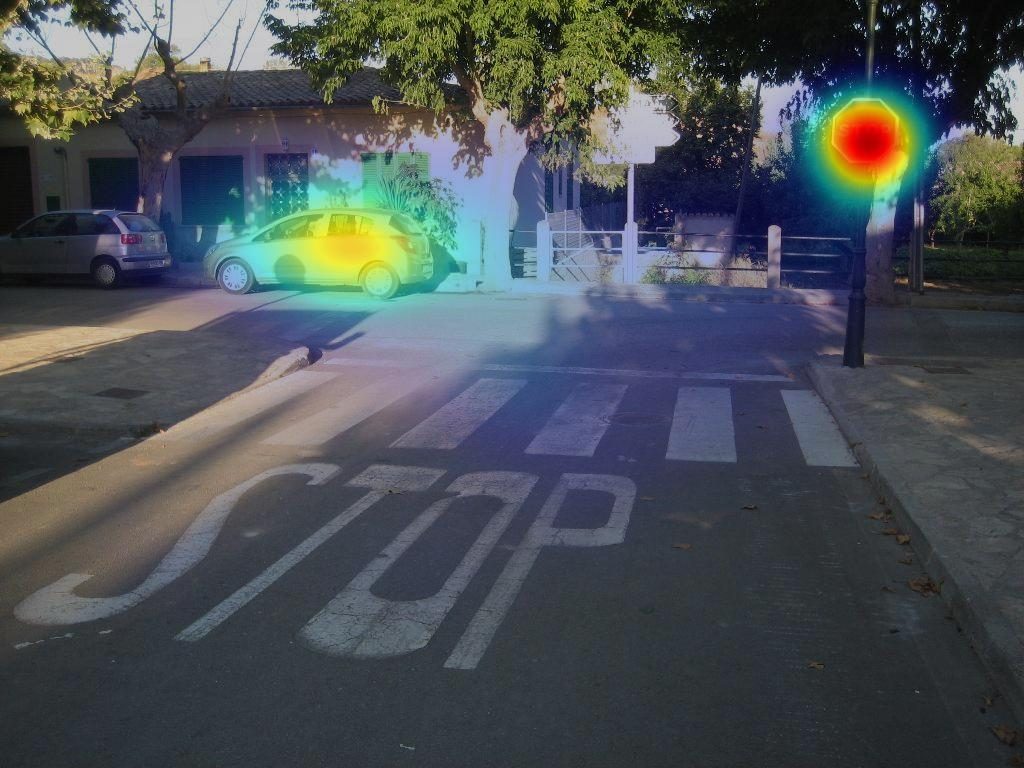

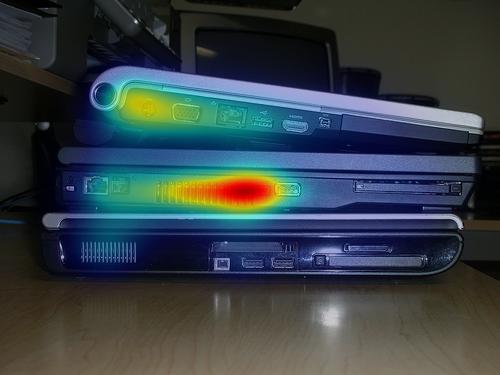

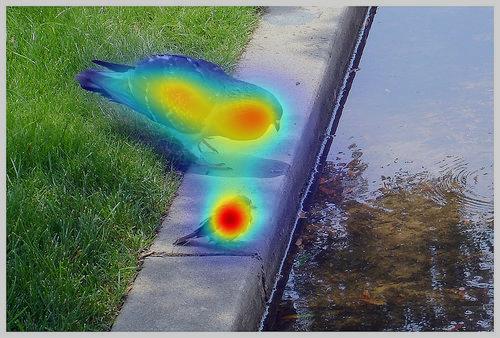

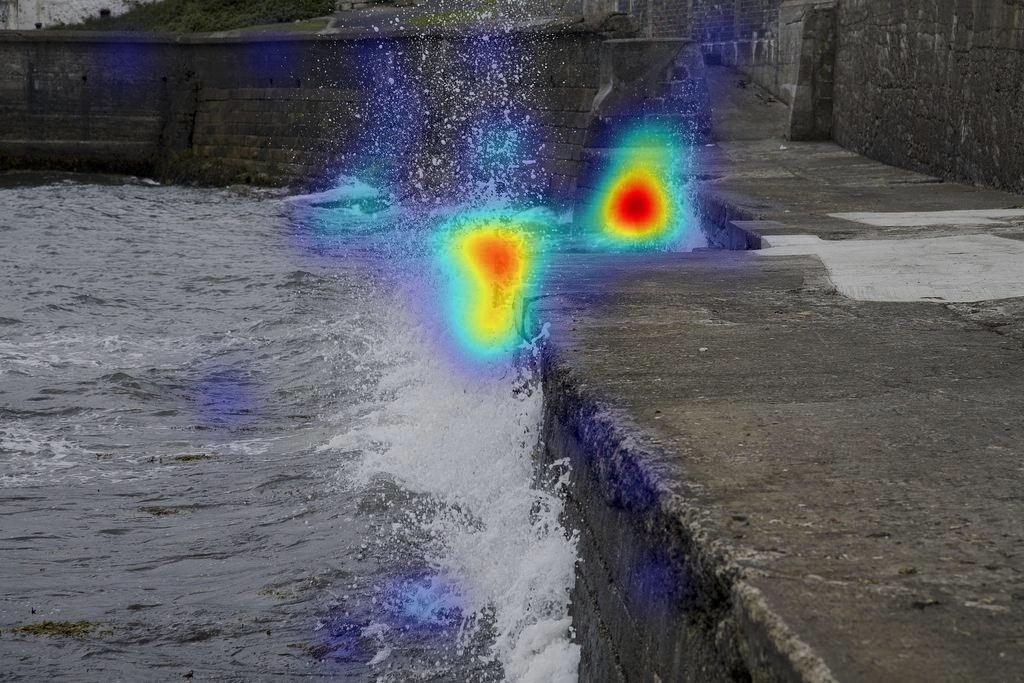

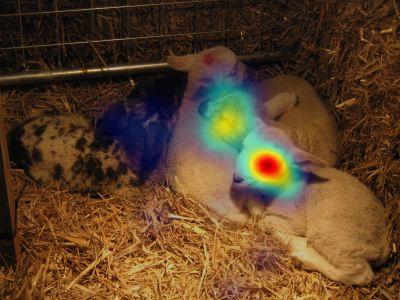

(a) Input image

(b) EHK

(c) Proposed

(d) Ground truth

Fig. II. More visual comparisons of the EHK model and the proposed model. The inputs of the 1st-4th rows are from MIT1003 and the inputs of the 5th-15th rows are from PASCAL-S.

In Fig. II, we can observe that the results of the proposed method are better than those of the EHK model in several ways: the proposed method assigns proper priorities to different salient regions in the 1st, 3rd, 5th, 8th, 11th, and 12th rows; corrects erroneous predictions to some extent in the 2nd, 4th, 6th, 7th, 9th, 10th, 13th, and 15th rows; and further improves prediction accuracy in the 14th row.

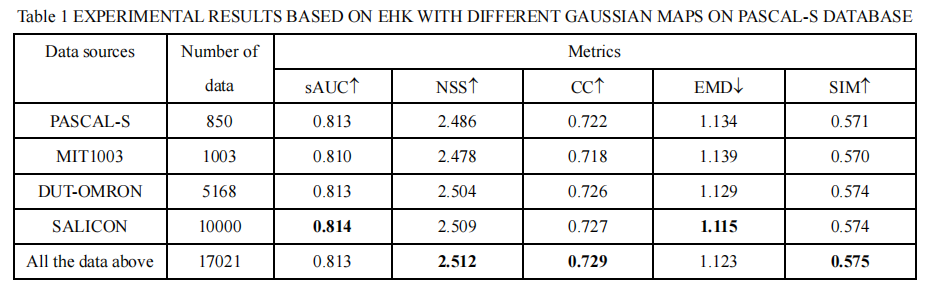

4. Evaluating the influence of different Gaussian maps

Since the center prior is a common property of the visual saliency mechanism, gaze fixations are biased toward the center of natural scene stimuli in all the databases. Given a standardized image size, and supposing that the number of saliency maps in each database is sufficiently large, the Gaussian maps learnt from different databases should be the same, owing to the statistically consistent behavior of the human visual system. Therefore, as the largest visual saliency database, SALICON is used to calculate the Gaussian map in this work. In our experiments (see Section III of the manuscript), testing is performed on two other databases, i.e., PASCAL-S and MIT1003, instead of SALICON. The strong results of the proposed method on these databases suggest that the learnt Gaussian map generalizes well.

Furthermore, it is interesting to examine the results when the Gaussian map is estimated from other data sources. We show these results on the PASCAL-S database in Table 1, where the number of saliency maps used to estimate the Gaussian map is also provided. As shown in Table 1, the overall trend is that the more data are used to calculate the Gaussian map, the better the performance. When the data source is sufficiently large, the performance becomes stable. Concretely, the results from the SALICON-derived Gaussian map are on par with those from the Gaussian map derived from all the data. Therefore, we can conclude that using SALICON to estimate the Gaussian map is adequate.
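
As a rough illustration of estimating a Gaussian map from a set of saliency maps, the following sketch averages the maps and fits an axis-aligned 2D Gaussian by moment matching. This is only an assumption about how such a map could be obtained, not the procedure actually used for G in this work; the function name `fit_gaussian_map` and the choice of normalizing the peak to 1 are hypothetical.

```python
import math


def fit_gaussian_map(saliency_maps):
    """Fit an axis-aligned 2D Gaussian to the mean of several saliency maps.

    saliency_maps: list of H x W nested lists with non-negative values,
    all resized to the same standardized size beforehand.
    Returns (G, (mu_y, mu_x), (sigma_y, sigma_x)), where G is an H x W map
    whose peak value is 1. Moment matching is an assumption of this sketch.
    """
    n = len(saliency_maps)
    h = len(saliency_maps[0])
    w = len(saliency_maps[0][0])

    # Pixel-wise mean over all maps.
    mean_map = [[sum(m[y][x] for m in saliency_maps) / n for x in range(w)]
                for y in range(h)]
    total = sum(sum(row) for row in mean_map)
    if total <= 0:
        raise ValueError("saliency maps must contain positive mass")

    # Marginal distributions along rows (y) and columns (x).
    py = [sum(row) / total for row in mean_map]
    px = [sum(mean_map[y][x] for y in range(h)) / total for x in range(w)]

    # First and second moments give the center and the spread.
    mu_y = sum(y * p for y, p in enumerate(py))
    mu_x = sum(x * p for x, p in enumerate(px))
    var_y = sum((y - mu_y) ** 2 * p for y, p in enumerate(py))
    var_x = sum((x - mu_x) ** 2 * p for x, p in enumerate(px))
    sigma_y = max(math.sqrt(var_y), 1e-6)  # guard against zero spread
    sigma_x = max(math.sqrt(var_x), 1e-6)

    # Evaluate the fitted Gaussian on the pixel grid.
    G = [[math.exp(-0.5 * (((y - mu_y) / sigma_y) ** 2
                           + ((x - mu_x) / sigma_x) ** 2))
          for x in range(w)]
         for y in range(h)]
    return G, (mu_y, mu_x), (sigma_y, sigma_x)
```

Under this sketch, adding more maps to `saliency_maps` only changes the fitted center and spread through the pixel-wise mean, which is consistent with the estimate stabilizing once the data source is large.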

5. Algorithmic-level comparison among different models

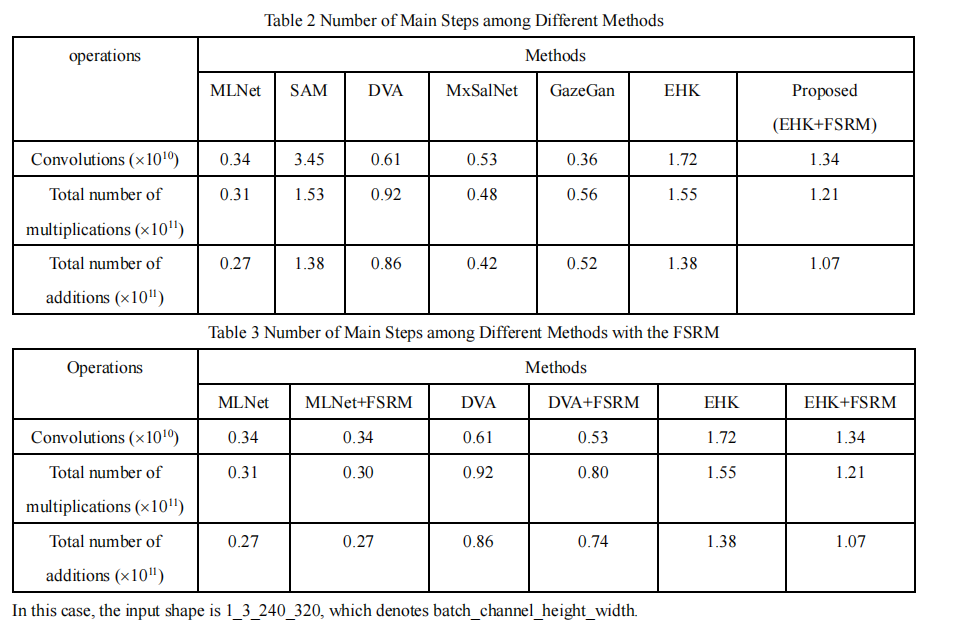

According to David Marr's work on vision [X1], a full analysis of an information processing system involves three levels: computational, algorithmic, and implementational. The computational level describes the information processing problem to be solved. The algorithmic level describes the steps that need to be carried out to solve the problem. The implementational level deals with the physical realization of the system.

Using this terminology, we compare the algorithmic steps of different saliency prediction models. As shown in our manuscript, the most competitive models are based on deep networks, whose main steps are convolutions, fully connected layers (i.e., matrix transformations), and activation functions (e.g., ReLU and sigmoid). In particular, the number of convolution operations is orders of magnitude larger than the number of other operations. Therefore, we compare our model with its competitors only in terms of the number of convolution operations, as shown in Table 2. In addition, given a convolution kernel of size k × k, one convolution involves k × k multiplications and (k × k) − 1 additions when the bias is ignored. Thus, we can further calculate the total number of multiplications and additions.

From Table 2, we can see that SAM contains the most algorithmic steps and MLNet the fewest. The EHK model also contains a large number of convolutions. However, compared with EHK, our proposed model reduces the number of convolutions by about 3.8 × 10^9, a reduction of about 22%. This benefit comes from the feature selection of the FSRM: in the proposed model, each FSRM halves the number of feature channels, greatly reducing the number of convolutions. The reduction is also reflected in the total number of multiplications and additions. Moreover, we perform a further comparison on other hierarchical fusion models, namely MLNet and DVA, by adding the FSRM to them, as shown in Table 3. From Table 3, we can see that adding the proposed FSRM to MLNet and DVA effectively reduces the number of operations.
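
The counting scheme described above can be sketched as follows. This is a minimal illustration, assuming that one "convolution" means one k × k kernel applied at one output position for one input/output channel pair, and that summing the k × k products takes (k × k) − 1 additions; the helper name `conv_layer_ops` and the layer sizes in the usage lines are hypothetical and are not taken from Table 2 or Table 3.

```python
def conv_layer_ops(h_out, w_out, c_in, c_out, k):
    """Count operations of one convolutional layer, ignoring the bias.

    Returns (convs, mults, adds):
      convs: number of k x k kernel applications
             (output positions x input channels x output channels),
      mults: k*k multiplications per kernel application,
      adds:  k*k - 1 additions per kernel application
             (additions across input channels are not counted here).
    """
    convs = h_out * w_out * c_in * c_out
    mults = convs * k * k
    adds = convs * (k * k - 1)
    return convs, mults, adds


# Hypothetical layer: halving the input channels halves every count.
full = conv_layer_ops(h_out=30, w_out=40, c_in=512, c_out=512, k=3)
halved = conv_layer_ops(h_out=30, w_out=40, c_in=256, c_out=512, k=3)
print("full:  ", full)
print("halved:", halved)
```

Because every count is linear in the number of input channels, halving the channels fed into a layer halves that layer's convolutions, multiplications, and additions, which is the mechanism by which a channel-reducing module lowers the totals.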

[X1] D. Marr, Vision: A Computational Investigation into the Human Representation and Processing of Visual Information. San Francisco, CA: W.H. Freeman, 1982.