Abstract

In this paper, we propose a method for detecting banding artifacts in high-dynamic-range (HDR) videos. The proposed method is the first banding detector designed for HDR videos. To detect banding artifacts effectively, we propose a multi-scale banding detection strategy based on multiple visual clues. Specifically, at each scale, the perceptual visibility of banding artifacts is measured sequentially by luminance, edge strength, and edge length clues. All these clues are designed by considering the properties of the human visual system and of HDR videos. Experimental results show that our method can effectively detect banding artifacts and provide banding visibility maps that closely match human visual perception.

More details about the work:

1. Details of the proposed model.

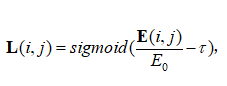

Fig. 1 Generating a visibility map of banding artifacts at one scale.

More details about Gaussian pyramids, luminance clues and length clues

(1) In the Gaussian pyramid processing flow, the original image serves as the first layer of the Gaussian pyramid. We apply a Gaussian filter to the original image and then down-sample it by a factor of two to obtain the second layer. The second layer is blurred by the same Gaussian filter and then down-sampled by a factor of two to obtain the third layer. The subsequent banding detection is performed in each of the three pyramid layers.
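The three-layer construction described above can be sketched as follows. This is a minimal illustration, not the implementation used in the paper: the kernel width (`sigma`, `radius`), the edge-replicating border handling, and all function names are assumptions introduced here.

```python
import numpy as np

def gaussian_kernel_1d(sigma=1.0, radius=2):
    """Normalized 1D Gaussian kernel (sigma and radius are assumed values)."""
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()

def gaussian_blur(img, sigma=1.0, radius=2):
    """Separable Gaussian blur with edge-replicated borders."""
    k = gaussian_kernel_1d(sigma, radius)
    padded = np.pad(img.astype(np.float64), radius, mode="edge")
    # Filter along rows, then along columns; "valid" removes the padding.
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def gaussian_pyramid(img, levels=3):
    """Layer 1 is the input; each further layer is blurred, then halved."""
    layers = [img.astype(np.float64)]
    for _ in range(levels - 1):
        blurred = gaussian_blur(layers[-1])
        layers.append(blurred[::2, ::2])  # down-sample by a factor of two
    return layers

if __name__ == "__main__":
    frame = np.random.rand(64, 48)
    for i, layer in enumerate(gaussian_pyramid(frame), start=1):
        print(f"layer {i}: {layer.shape}")
```

Banding detection would then be run on each returned layer independently.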

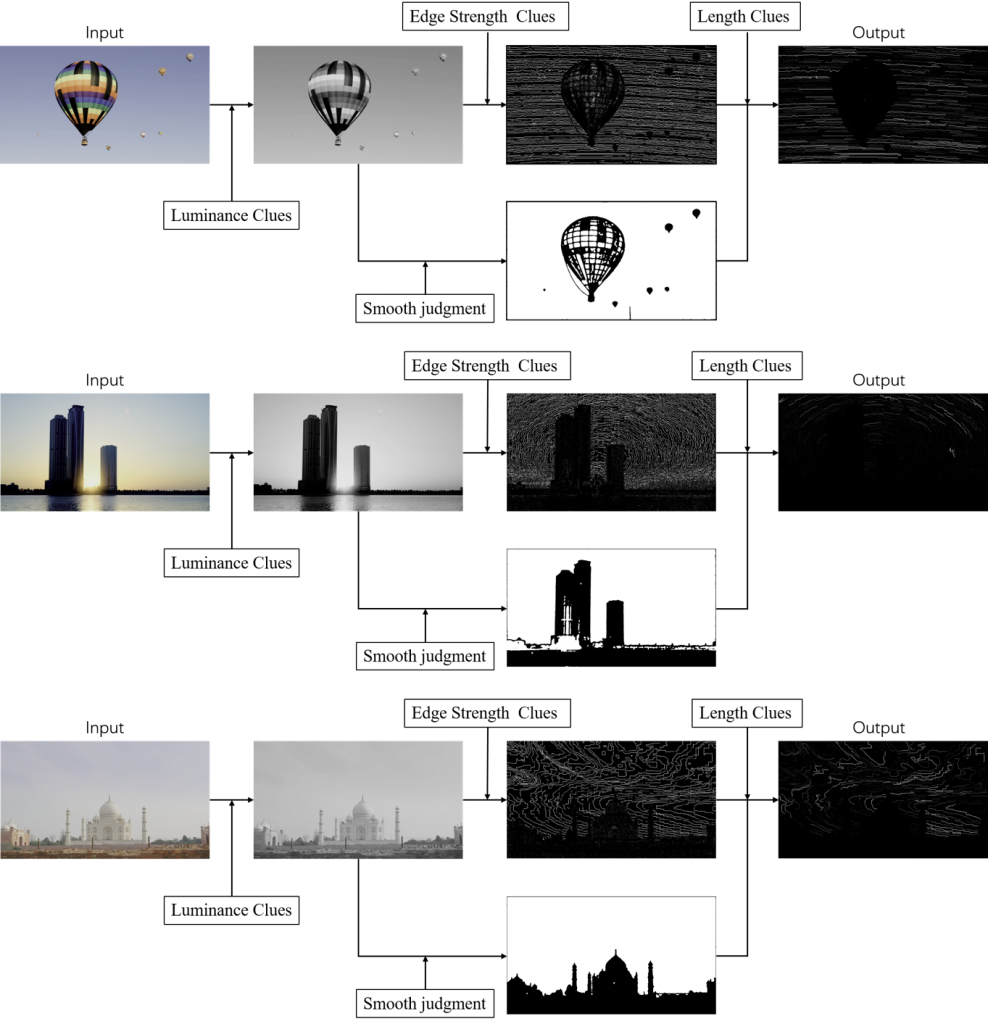

(2) The use of the luminance clue is given in Equation 1 of the manuscript. First, the HDR video data are converted into the luminance emitted by the display using the display's electro-optical transfer function (EOTF). For HDR video, this function is the PQ function, which is defined in [10] of the paper. The displayed luminance is then converted into perceptually linear luminance that matches the response characteristics of the human visual system, and this serves as the luminance clue. The perceptually uniform (PU21) curve used for this conversion is defined in [9]. The length clue is defined by the following equation:

where the parameter E0 is set according to the characteristics of the human visual system and depends on the display parameters and on the viewing distance. The value of τ depends on E0 and adjusts the range over which the sigmoid function acts on the edge length. The relationship between pixel length and physical length is obtained by dividing the diagonal length of the display in pixels by its diagonal size of z inches. Thus, we can calculate the shortest banding edge length in pixels by:

where Emin is the shortest perceptible banding edge length in pixels. E0 takes the value of Emin calculated for the corresponding viewing condition.
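Two display-side quantities mentioned above can be illustrated in code: the PQ EOTF used in the first step of the luminance clue, and the pixel density obtained by dividing the diagonal pixel length by z inches. The sketch below uses the PQ constants from SMPTE ST 2084. The function names, and the assumption that the input signal is already normalized to [0, 1], are introduced here for illustration. The PU21 mapping and the formulas for the length clue and Emin are not reproduced.

```python
import numpy as np

# PQ constants as specified in SMPTE ST 2084.
M1 = 2610.0 / 16384.0
M2 = 2523.0 / 4096.0 * 128.0
C1 = 3424.0 / 4096.0
C2 = 2413.0 / 4096.0 * 32.0
C3 = 2392.0 / 4096.0 * 32.0

def pq_eotf(signal):
    """Map a normalized PQ signal in [0, 1] to display luminance in cd/m^2."""
    e = np.clip(np.asarray(signal, dtype=np.float64), 0.0, 1.0)
    p = e ** (1.0 / M2)
    return 10000.0 * (np.maximum(p - C1, 0.0) / (C2 - C3 * p)) ** (1.0 / M1)

def pixels_per_inch(width_px, height_px, diagonal_inches):
    """Pixel density: diagonal length in pixels divided by z inches."""
    return float(np.hypot(width_px, height_px) / diagonal_inches)

if __name__ == "__main__":
    # Hypothetical 10-bit full-range code values, normalized by 1023.
    codes = np.array([0, 256, 512, 768, 1023]) / 1023.0
    print(pq_eotf(codes))
    # Hypothetical viewing setup: a 3840x2160 display with a 55-inch diagonal.
    print(pixels_per_inch(3840, 2160, 55.0))
```

The pixel density converts a physical edge length on the screen into a length in pixels, which is the unit in which Emin and E0 are expressed.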

2. Further discussion and comparison of the experiments

Fig. 2 Additional experimental results. From left to right: input, brightness, gradient, and banding visibility maps.

Fig. 3 Illustration on the intermediate results

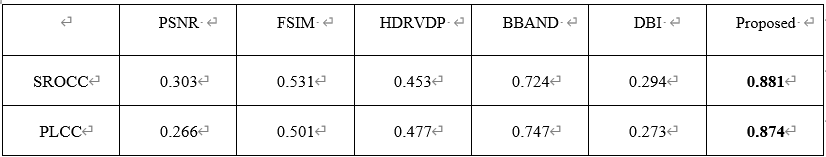

Table 1 Performance comparison of metrics against subjective scores.

In addition to the quantitative results (SROCC and PLCC) in Table 1 and the visual results in Fig. 4, we analyze the scatter plots and show some intermediate results for each component of the proposed detector.

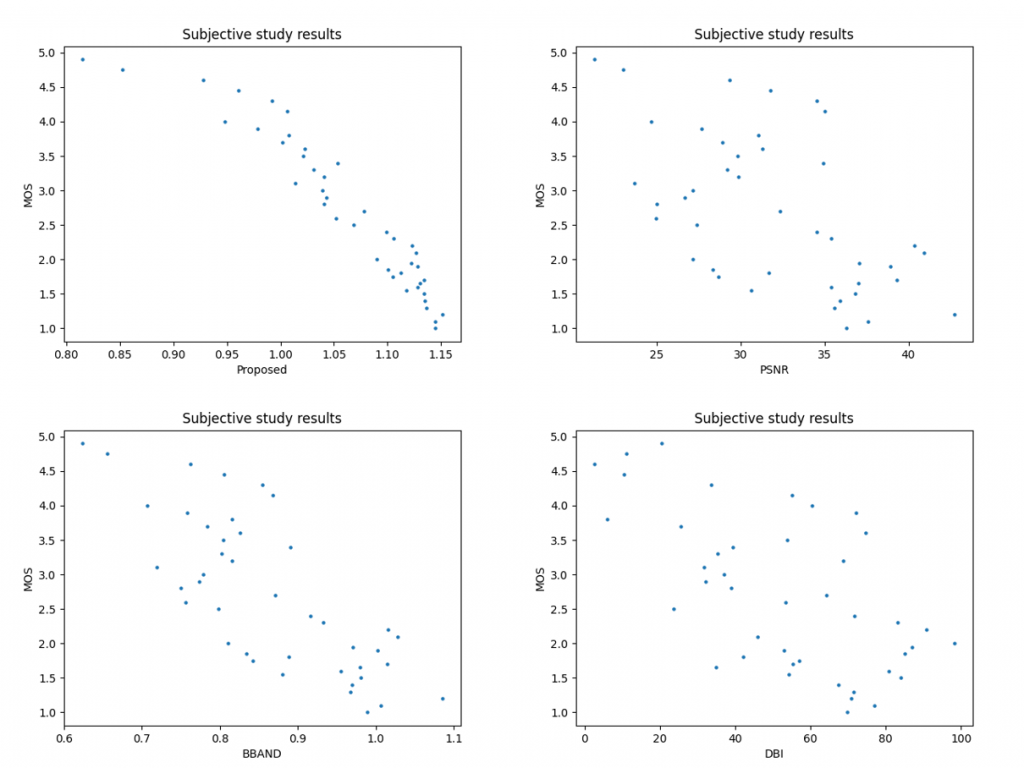

The scatter plots are shown in Fig. 4. Our banding index shows a strong negative correlation with the MOS, whereas PSNR, BBAND, and DBI are poorly correlated with it. This demonstrates the effectiveness of the proposed detector in assessing HDR banding. The gap is mainly attributed to the many differences between HDR and SDR videos, including dynamic range, color gamut, bit depth, and encoding curves, which make it difficult for SDR banding detectors to work well on HDR videos. In contrast, our detector is designed around the characteristics of HDR videos and is therefore well suited to banding detection in HDR videos.

Fig. 4 Subjective study results
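For readers who want to reproduce this kind of comparison, the SROCC and PLCC between a banding index and the MOS can be computed as in the sketch below. The function names and the sample values are made up for illustration and are not data from our study. The sketch applies no nonlinear mapping before computing PLCC, which is an assumption rather than a statement of the protocol behind Table 1.

```python
import numpy as np

def plcc(x, y):
    """Pearson linear correlation coefficient."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    return float(np.corrcoef(x, y)[0, 1])

def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    values = np.asarray(values, dtype=np.float64)
    order = np.argsort(values, kind="mergesort")
    ranks = np.empty(len(values), dtype=np.float64)
    ranks[order] = np.arange(1, len(values) + 1)
    for v in np.unique(values):
        tie = values == v
        ranks[tie] = ranks[tie].mean()
    return ranks

def srocc(x, y):
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return plcc(average_ranks(x), average_ranks(y))

if __name__ == "__main__":
    # Made-up example: a higher banding index goes with a lower MOS.
    banding_index = [0.10, 0.40, 0.50, 0.90]
    mos = [4.5, 3.9, 3.0, 1.2]
    print("SROCC:", srocc(banding_index, mos))
    print("PLCC:", plcc(banding_index, mos))
```

A value close to -1 for both coefficients corresponds to the strong negative correlation visible in the scatter plot of our banding index.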

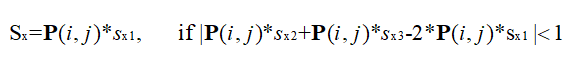

(1) On the smooth region judgment: since a banding artifact appears as an edge, we first use the Sobel operator and its multi-scale variants for edge extraction:

where sx1 denotes the horizontal Sobel operator, sx2 and sx3 are its multi-scale variants, and the vertical operators are defined analogously. Based on these operators, we use multi-scale matching to ensure that the regions on both sides of the banding are smooth regions. The details are as follows:

where I denotes the input map and |·| returns the element-wise absolute value of its argument. This formula implies that a pixel is defined as a candidate banding pixel only when the areas on both sides of the banding are smooth.
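As a point of reference for the edge extraction step, the sketch below applies the standard 3x3 horizontal and vertical Sobel operators and takes element-wise absolute values. It covers only the base scale: the multi-scale variants sx2 and sx3 and the matching rule are not reproduced here. The function names and the edge-replicating border handling are assumptions.

```python
import numpy as np

# Standard 3x3 Sobel kernels (base scale only).
SOBEL_X = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])
SOBEL_Y = SOBEL_X.T

def correlate3x3(img, kernel):
    """Correlate a 2D image with a 3x3 kernel, replicating the borders."""
    h, w = img.shape
    padded = np.pad(img.astype(np.float64), 1, mode="edge")
    out = np.zeros((h, w), dtype=np.float64)
    for di in range(3):
        for dj in range(3):
            out += kernel[di, dj] * padded[di:di + h, dj:dj + w]
    return out

def sobel_abs(img):
    """Absolute horizontal and vertical Sobel responses."""
    gx = np.abs(correlate3x3(img, SOBEL_X))
    gy = np.abs(correlate3x3(img, SOBEL_Y))
    return gx, gy

if __name__ == "__main__":
    # A vertical step edge: left half 0, right half 10.
    step = np.zeros((8, 8))
    step[:, 4:] = 10.0
    gx, gy = sobel_abs(step)
    print(gx[4])  # non-zero only next to the step
    print(gy[4])  # zero: there is no vertical change
```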

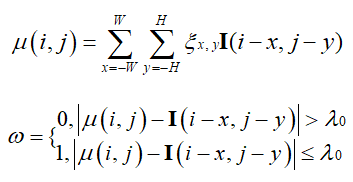

Further, the smooth region is judged using the difference between the Gaussian-weighted mean of the surrounding pixels and the value of the pixel itself; pixels for which this difference is smaller than a threshold are regarded as smooth-region pixels. The details are as follows:

where ξ(∙) is a 2D Gaussian weighting function, (i, j) are the spatial coordinates in the input map I, ω denotes the smooth region map, and λ0 is the threshold that discriminates between smooth and non-smooth pixels, which we set to 1.

There may be false detections in some regions, but they are excluded by the other banding clues. In our experiments, this smoothness judgment locates banding pixels well, is computationally efficient, and meets our needs.
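The smoothness test described above can be sketched as follows, under stated assumptions: the Gaussian window size and standard deviation (`radius`, `sigma`) are placeholder values, the window includes the center pixel, and borders are handled by edge replication. Only the threshold λ0 = 1 comes from the text. The function names are introduced here for illustration.

```python
import numpy as np

def gaussian_weighted_mean(img, sigma=1.5, radius=3):
    """Local Gaussian-weighted mean via a separable, normalized kernel."""
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    k /= k.sum()
    padded = np.pad(img.astype(np.float64), radius, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def smooth_region_map(img, lambda0=1.0, sigma=1.5, radius=3):
    """True where |Gaussian-weighted local mean - pixel value| < lambda0."""
    mean = gaussian_weighted_mean(img, sigma, radius)
    return np.abs(mean - img.astype(np.float64)) < lambda0

if __name__ == "__main__":
    # Flat patch with one outlier pixel: only its neighbourhood is non-smooth.
    patch = np.full((16, 16), 100.0)
    patch[8, 8] = 200.0
    omega = smooth_region_map(patch)
    print(omega[8, 8], omega[0, 0])
```

Because the test is a single blur followed by a comparison, its cost is linear in the number of pixels, which is consistent with the low computational cost noted in the text.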

(2) As with the edge detector, we do not wish to exclude other solutions for smooth region detection from the proposed framework. Some examples of our smooth judgments are shown in Fig. 5, which demonstrate its effectiveness. Since the focus of this manuscript is not on smooth region detection, we do not investigate different smooth discriminators.

Fig. 5 Illustration of the extracted smooth areas (in white)

Our dataset:

Link: https://pan.baidu.com/s/1WbLymdyx1xxeYKbfR-5Yag

Extraction code: hofq